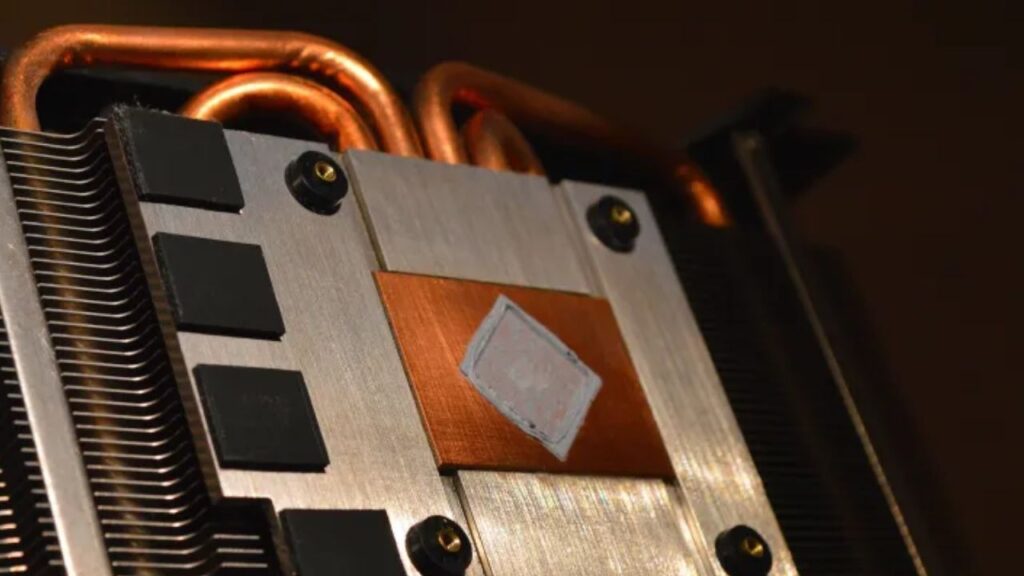

If you have ever built or opened a relatively modern gaming computer, you may have been surprised by the large size of graphics cards, even in basic and lower-end models. This is mainly due to the high power consumption of their chips and their significant cooling requirements. If you look closely, you’ll notice that most of their size is taken up by large copper and aluminum heatsinks along with their fans, while the chip and the graphics card’s PCB (Printed Circuit Board) occupy a tiny portion of the size in comparison.

This size difference has been further exaggerated in recent generations of graphics cards, with models like the RTX 4090 or the RX 7900 XTX featuring designs that occupy 3 or 4 PCIe slots in width to accommodate their massive heatsinks and attempt to control the chip temperatures. However, due to their high power consumption, this is not an easy task. But it wasn’t always like this, as graphics cards in their early days had minimal heatsinks or sometimes even lacked fans since temperatures were not usually a problem.

The need to control temperatures is what has gradually caused graphics cards to grow in size, eventually leading to triple-fan heatsinks or liquid cooling solutions to keep chip temperatures at reasonable levels and extract their maximum performance. But what is the ideal temperature for our graphics card? Do we really need such large heatsinks for our graphics cards?

Temperature Limit

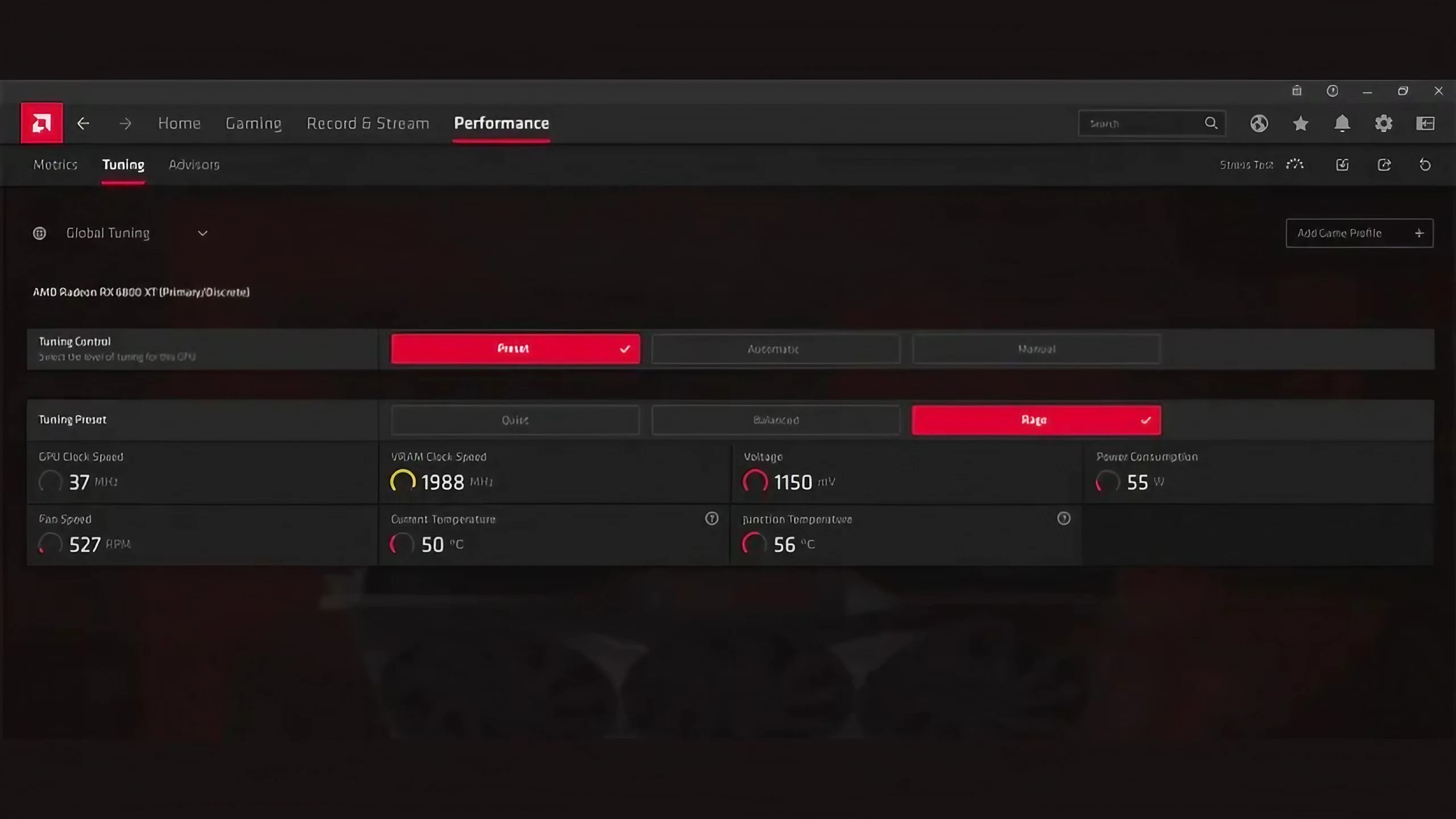

Before delving into the optimal temperature, one crucial piece of information we need to know about our graphics card is its maximum temperature. Approaching or reaching that temperature can lead to a loss of performance and can potentially damage the graphics card’s chip. Most maximum temperatures fall between 95 and 110 °C, depending on the model, so it’s essential to check the exact value for our specific graphics card.

As we’ll see later on, we always aim to keep the temperatures as far away from that maximum value as possible. In most cases, we don’t have to worry about this temperature because all graphics cards typically come with more than sufficient cooling systems to maintain safe operating temperatures. However, if we still get too close to the maximum or reach those values, we should review our fan configuration or check the condition of our thermal paste.
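To make the idea of staying away from the maximum more concrete, here is a minimal Python sketch. It assumes an Nvidia card with the `nvidia-smi` tool installed for the reading itself. The `thermal_status` helper and its 10-degree warning margin are hypothetical choices made for illustration, not vendor figures, and the maximum temperature must still be looked up for each specific model.

```python
import subprocess


def read_gpu_temperature_c() -> int:
    """Read the core temperature (in °C) of the first Nvidia GPU via nvidia-smi."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=temperature.gpu",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    # One line per GPU; take the first one.
    return int(out.splitlines()[0].strip())


def thermal_status(current_c: float, max_c: float,
                   warn_margin_c: float = 10.0) -> str:
    """Classify a reading relative to the card's maximum temperature.

    warn_margin_c is an illustrative assumption, not a vendor value.
    """
    headroom = max_c - current_c
    if headroom <= 0:
        return "at or above maximum"
    if headroom <= warn_margin_c:
        return "close to maximum"
    return "ok"
```

For a card with a 95 °C maximum, `thermal_status(read_gpu_temperature_c(), 95)` would tell us whether it is time to review the fan configuration or the thermal paste.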

The Effect of Temperatures on Frequencies

The next step to determine the optimal temperature for a graphics card is to understand the effect of higher temperatures on the card. Depending on the temperature, the algorithms that control the graphics card’s frequency will push it more or less aggressively, similar to what we saw with processors in this guide.

It’s also important to note that all graphics cards react differently to temperature, starting with the fact that each brand has a different approach to interpreting temperatures when applying turbo frequencies. Additionally, with each generation, details that affect the graphics card’s behavior may change.

AMD uses a turbo and power state system similar to what we find in processors, transitioning between different states based on the load the graphics card is experiencing. This is why AMD cards have more tightly adjusted base and turbo frequencies compared to other graphics cards. The maximum frequency reached without any adjustments will be close to the turbo frequency advertised for each model, but AMD cards do not have an auto-boosting system with a curve as advanced as Nvidia’s.

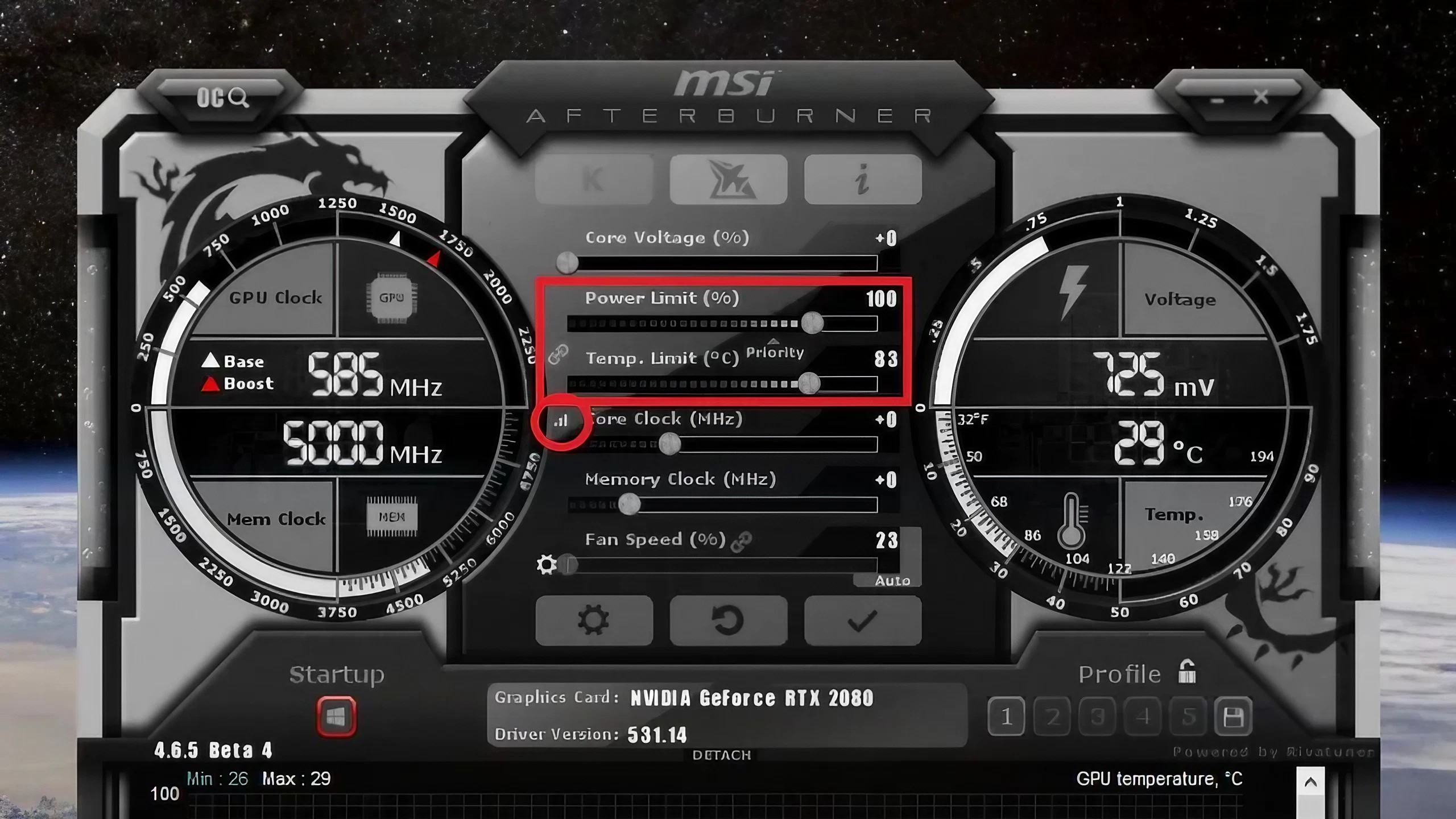

Nvidia’s case is somewhat different, especially since the GTX 1000 series, which introduced GPU Boost 3.0. GPU Boost itself first appeared earlier, with the GTX 600 series, and it has continued to evolve across generations, playing a crucial role in maximizing the performance of Nvidia graphics cards. Nvidia graphics cards also have different power states, but these have minimal impact on the final performance they offer. The final frequencies are calculated using a curve that relates voltages on one axis to frequencies on the other, controlled by the GPU Boost algorithm.
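As a rough illustration of what a voltage/frequency curve means in practice, the sketch below stores a handful of points and interpolates linearly between them. The points in `VF_CURVE` and the `frequency_at` helper are invented for this example; real curves are defined per card and can be inspected in tools such as MSI Afterburner.

```python
from bisect import bisect_right

# Hypothetical (voltage in V, frequency in MHz) points, sorted by voltage.
VF_CURVE = [
    (0.70, 1350.0),
    (0.80, 1590.0),
    (0.90, 1770.0),
    (1.00, 1905.0),
    (1.05, 1950.0),
]


def frequency_at(voltage: float, curve=VF_CURVE) -> float:
    """Return the frequency for a voltage by linear interpolation.

    Voltages outside the curve are clamped to its first or last point.
    """
    volts = [v for v, _ in curve]
    if voltage <= volts[0]:
        return curve[0][1]
    if voltage >= volts[-1]:
        return curve[-1][1]
    i = bisect_right(volts, voltage)          # first point above `voltage`
    (v0, f0), (v1, f1) = curve[i - 1], curve[i]
    return f0 + (f1 - f0) * (voltage - v0) / (v1 - v0)
```

With these made-up points, a voltage halfway between 0.80 V and 0.90 V lands halfway between 1590 MHz and 1770 MHz.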

GPU Boost’s primary function is to increase the graphics card’s frequency when conditions allow it. These conditions mainly consist of having sufficient power available (set by the adjustable “power limit”) and staying below a specific temperature known as the target temperature. This target temperature typically sits between 80 and 85 °C, and GPU Boost will always try to approach and maintain it by adjusting the graphics card’s clock speed, which in turn affects its temperature. GPU Boost works on top of the traditional turbo frequencies that graphics cards still incorporate, and it operates similarly to Intel’s Turbo Boost 3.0 or AMD’s PBO in processors. That’s why we observe Nvidia cards reaching much higher operating frequencies than their official base and turbo frequencies. For example, an RTX 2060 has base and turbo frequencies of approximately 1.37 and 1.68 GHz, respectively, but in games we will see it operating at around 1.9 GHz without any manual adjustments, thanks to this technology.
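The two conditions described above (power headroom and temperature headroom) can be pictured as a very simple control step. This is only a toy model under stated assumptions, not Nvidia’s actual algorithm: `BoostLimits`, `next_clock`, and every number in them are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BoostLimits:
    target_temp_c: float = 83.0   # assumed value inside the 80-85 °C range
    power_limit_w: float = 160.0  # hypothetical board power limit
    min_mhz: int = 1300           # hypothetical clock floor
    max_mhz: int = 1950           # hypothetical clock ceiling
    step_mhz: int = 15            # hypothetical adjustment step


def next_clock(current_mhz: int, temp_c: float, power_w: float,
               limits: BoostLimits = BoostLimits()) -> int:
    """Raise the clock one step when both conditions hold, otherwise lower it."""
    has_headroom = (temp_c < limits.target_temp_c
                    and power_w < limits.power_limit_w)
    delta = limits.step_mhz if has_headroom else -limits.step_mhz
    # Keep the result inside the allowed clock range.
    return max(limits.min_mhz, min(limits.max_mhz, current_mhz + delta))
```

Calling `next_clock` repeatedly with fresh sensor readings makes the clock climb while the card is cool and within its power limit, and back off once either limit is reached.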

Recommended Temperature for Graphics Cards

As we have seen, the optimal temperatures for graphics cards are those at which they can fully utilize their frequency boosting algorithms to achieve maximum performance.

In the case of AMD, this occurs when the temperature is below the limit, similar to how it works with processors. For Nvidia cards, it gets a bit more complicated because they have two turbo mechanisms: the normal turbo and GPU Boost. To extract the maximum performance, we need to stay below the target temperature used by GPU Boost, which can be manually adjusted using software like MSI Afterburner, making it a more flexible value. But that’s not the end of the story: GPU Boost starts reducing the frequency before the target is reached, and it does so more aggressively the closer the temperature gets to it. As a result, lower core temperatures translate into higher frequencies. How much higher depends on the load of the graphics card, the model, and other factors like configuration and drivers, since GPU Boost is an adaptive algorithm. Therefore, it won’t behave the same in all cases or with all graphics cards.
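To visualize why cooler cards clock higher, we can model the frequency reduction as a curve that gets steeper near the target temperature. The quadratic shape, the 50 °C starting point, and the 120 MHz total reduction below are all assumptions chosen for illustration; the real behavior varies by model, load, and driver.

```python
def estimated_clock_mhz(temp_c: float,
                        target_temp_c: float = 83.0,      # assumed target
                        max_boost_mhz: float = 1950.0,    # hypothetical peak clock
                        ramp_start_c: float = 50.0,       # hypothetical ramp start
                        max_reduction_mhz: float = 120.0  # hypothetical total drop
                        ) -> float:
    """Estimate the sustained clock for a given core temperature.

    Below ramp_start_c the full boost clock is kept. Above it, the
    reduction grows with the square of the progress toward the target,
    so each extra degree costs more clock the closer we get.
    """
    if temp_c <= ramp_start_c:
        return max_boost_mhz
    progress = min(1.0, (temp_c - ramp_start_c) / (target_temp_c - ramp_start_c))
    return max_boost_mhz - max_reduction_mhz * progress ** 2
```

In this model, going from 70 to 80 °C costs more frequency than going from 60 to 70 °C, which is the kind of behavior that makes a larger heatsink pay off even when the card is nowhere near its maximum temperature.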

At the beginning of the article, we posed the question of why graphics cards have grown so much in size, and now we have all the information to answer it. Graphics cards have increased in size not only because of their higher power consumption but also to keep temperatures under control: not just far from their maximum operating values, but low enough for the boosting algorithms to work freely and extract maximum performance at an acceptable noise level. This is a change from earlier generations, when it was common to have a noisy blower-style fan on the graphics card that forced users to choose between noise and performance.